ATSS3D: Adaptive Threshold for Semi-Supervised 3D Object Detection / under review at ICCV 2025

Nov 16, 2024

Yu Chen

Zicheng Zeng

Nuo Chen

Yong Du

Huaidong Zhang

Abstract

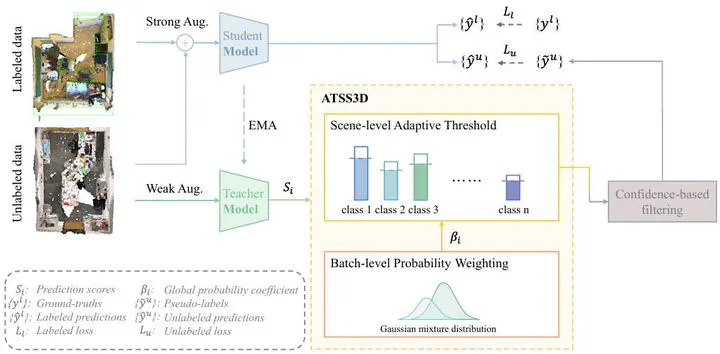

Semi-supervised 3D object detection leverages unlabeled data to reduce the substantial time and labor costs of labeling large-scale datasets. Existing pseudo-labeling methods use a teacher network to generate high-quality pseudo labels for unlabeled data and train a student network jointly on them to improve detection performance and generalization. In this process, a manually set, fixed threshold filters the generated pseudo labels to keep large numbers of low-quality and erroneous predictions out of the training set. However, a fixed threshold adapts poorly to the characteristics of different categories and scenes, which can lead to pseudo labels of uneven quality. To address this issue, we propose ATSS3D, a semi-supervised 3D object detection method that uses a probabilistic-decision adaptive threshold based on learned states. Specifically, ATSS3D introduces a scene-level adaptive threshold that flexibly controls how unlabeled data is used based on the class frequencies of the current scene. Additionally, we introduce a batch-level probability weighting mechanism that estimates the confidence distribution of each class, enabling adaptive threshold filtering according to the model’s performance on the current batch. Finally, we dynamically adjust the thresholds using class prediction scores, so that the adaptive threshold function better reflects the class distribution characteristics at each training step. Our experiments on the ScanNet and SUN RGB-D benchmark datasets show that ATSS3D significantly improves the performance of current semi-supervised 3D object detection methods, especially when only small amounts of labeled data are available.
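To make the contrast between a fixed and a class-adaptive threshold concrete, the following is a minimal illustrative sketch and not the ATSS3D formulation, which the abstract does not specify. It assumes a simple scaling rule in which each class threshold is a base value multiplied by that class's mean confidence in the batch relative to the most confident class. The function names, the `base` and `floor` parameters, and the scaling rule itself are all hypothetical.

```python
def adaptive_thresholds(preds, num_classes, base=0.9, floor=0.5):
    """Per-class thresholds from batch confidence (hypothetical rule).

    preds: list of (class_id, score) teacher predictions for one batch.
    Classes the teacher is less confident about get a lower threshold,
    but never below `floor`.
    """
    sums = [0.0] * num_classes
    counts = [0] * num_classes
    for class_id, score in preds:
        sums[class_id] += score
        counts[class_id] += 1

    # Mean confidence per class; classes with no predictions get 0.0.
    means = [sums[c] / counts[c] if counts[c] else 0.0
             for c in range(num_classes)]
    top = max(means)
    if top == 0.0:
        # No predictions at all: fall back to the fixed threshold.
        return [base] * num_classes

    # Scale the base threshold by each class's relative confidence.
    return [max(floor, base * m / top) for m in means]


def filter_pseudo_labels(preds, thresholds):
    """Keep only predictions whose score reaches their class threshold."""
    return [(c, s) for c, s in preds if s >= thresholds[c]]


if __name__ == "__main__":
    batch = [(0, 0.95), (0, 0.85), (1, 0.6), (1, 0.4)]
    th = adaptive_thresholds(batch, num_classes=3)
    print(th)
    print(filter_pseudo_labels(batch, th))
```

With a single fixed threshold of 0.9, every prediction for class 1 in this toy batch would be discarded. Under the assumed scaling rule, the harder class keeps its more confident prediction while the easier class is still filtered strictly.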

Type